Many devices, from televisions to smartphones, tout the ability to display HDR content, but the different formats and names can seem confusing. Here’s AppleInsider’s guide to what the different HDR video standards mean to you and your videos.

Over the years, HDR has grown to become as much of a feature to look for as 4K for televisions, or high-resolution screens for your Mac or other computing devices. But while the typical user has a vague idea that HDR can offer a better picture, the introduction of terms including HDR10, Dolby Vision, and HLG suggests there’s a lot more to picking hardware than first thought.

Fundamentally speaking, there’s not that much for the average person to worry about, as the vast majority of the time, they will still be able to watch the HDR content they want to watch.

What is HDR?

Short for High-Dynamic Range, HDR was originally a concept that applied to photography, with HDR images combining images of different exposures together to cover a wider range of colors and brightness levels than a single exposure would allow. For still images, this lets a single picture show details that would normally be too dark to capture in an exposure set for bright areas, and vice versa.

For example, an HDR image would allow a well-exposed photograph of a cave entrance on a bright summer’s day at noon to still show things within the dark entranceway. HDR lets viewers see both the bright elements and the dark sections in higher detail than normal.

The same principle applies to HDR video, in that a video captured in HDR will allow for a wider array of luminosity at both bright and dark levels.

This need to control brightness of colors means the ability to manipulate light output sufficiently is an important factor of HDR, one that many people don’t really think about. This is because the brightness of a color can impact how it appears compared to the same color at a lower brightness.

Typical standard dynamic range content is mastered for a peak of 100 nits of brightness. HDR content, which needs to have more range of potential brightness levels for its color representation, can be mastered at a higher 1,000 nits, and for some formats, even higher levels.

At the same time, HDR video also expands the color palette, allowing for more colors to be used to display an image, one that can be brighter and more vivid to the user. Combined with the luminosity, the higher contrast levels, and generally better image fidelity, HDR video can provide a considerably better image than the normal standard video formats.

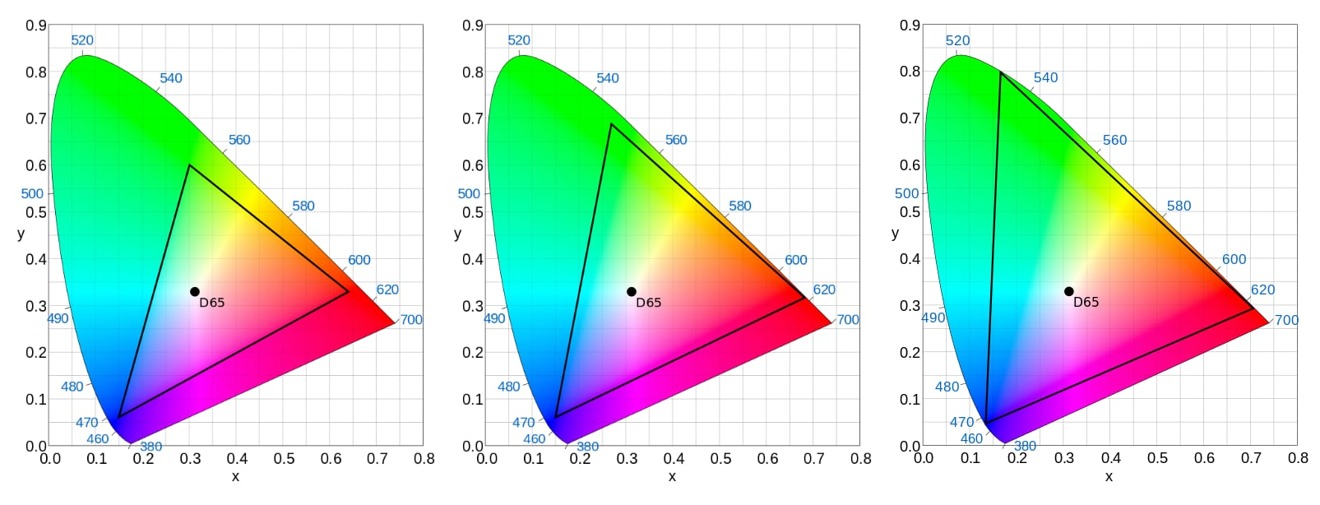

More specifically, a normal HD video will use an 8-bit specification, which the International Telecommunication Union refers to as Rec.709 or BT.709, while the 10-bit and 12-bit pictures of HDR content adhere to Rec.2020 or BT.2020, which occupies a far wider color gamut.

Color gamut charts showing coverage areas for SDR (Rec.709, left), a DCI-P3 display (middle), and an HDR display (Rec.2020, right)

In effect, a standard dynamic range image may only have approximately 16 million colors to use to create a picture, whereas any HDR video format that is 10-bit or higher has over a billion to play with.
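The color counts quoted above follow directly from the bit depth. As a quick sanity check, here is a minimal sketch that assumes three color channels (red, green, and blue) with the same bit depth for each; the function name is purely illustrative.

```python
def color_count(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given per-channel bit depth.

    Assumes every channel (red, green, blue) uses the same bit depth.
    """
    return (2 ** bits_per_channel) ** channels


for bits in (8, 10, 12):
    print(f"{bits}-bit: {color_count(bits):,} colors")
# 8-bit: 16,777,216 colors
# 10-bit: 1,073,741,824 colors
# 12-bit: 68,719,476,736 colors
```

Each extra two bits per channel multiplies the total by 64, which is why the jump from 8-bit to 10-bit moves from millions of colors to over a billion.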

Format Conundrum

Just as is the case with any major new technology, such as VHS versus Betamax or Blu-ray versus HD-DVD, there’s a selection of standards or formats that are battling for dominance in the industry. For the purposes of this explainer, we will be sticking to the main ones being consumed by the public: HDR10, HDR10+, Dolby Vision, Advanced HDR, and HLG.

HDR10

Of the five, HDR10 is by far the most common standard anyone will encounter when they look into HDR video. As it is an open standard that hardware producers can use without paying licensing fees to a holding company, HDR10 is found on practically any piece of modern hardware offering HDR capabilities.

Its ubiquity doesn’t necessarily translate into being the best on the market, due in part to being made to be as accessible as possible to hardware producers.

For example, content can be mastered with a peak brightness of 1,000 nits, which is easily beaten by other standards, but is still ten times the 100-nit peak of SDR. The 10 in the name also refers to its 10-bit color depth support, something that is, again, lacking compared to some of its 12-bit rivals.

The HDR10-supporting LG UltraFine Ergo display with a MacBook Pro

HDR10 also uses “static metadata” rather than “dynamic metadata.” While static metadata will provide a single defined picture brightness curve that applies to the content as a whole, dynamic metadata can provide this same curve for each individual shot or scene, allowing for a perfectly optimized image throughout a constantly changing video.
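To make the difference concrete, here is a deliberately simplified sketch. It assumes a hypothetical two-scene video and a display that peaks at 500 nits, and it uses plain linear scaling in place of the tone-mapping curves real televisions apply. The `Scene` class, the nit values, and `scale_factor` are illustrative inventions, not part of any HDR specification.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    peak_nits: float  # brightest point in the scene (made-up values)


def scale_factor(content_peak: float, display_peak: float) -> float:
    """Naive linear scaling: dim only when content exceeds the display."""
    return min(1.0, display_peak / content_peak)


scenes = [Scene("cave interior", 120.0), Scene("sunlit exit", 1000.0)]
DISPLAY_PEAK = 500.0  # hypothetical display capability in nits

# Static metadata: a single peak value describes the whole video,
# so every scene is scaled as if it were as bright as the brightest one.
static_peak = max(s.peak_nits for s in scenes)
static = {s.name: scale_factor(static_peak, DISPLAY_PEAK) for s in scenes}

# Dynamic metadata: each scene carries its own peak value,
# so only the scenes that need compressing are compressed.
dynamic = {s.name: scale_factor(s.peak_nits, DISPLAY_PEAK) for s in scenes}

print(static)   # {'cave interior': 0.5, 'sunlit exit': 0.5}
print(dynamic)  # {'cave interior': 1.0, 'sunlit exit': 0.5}
```

In this toy model, static metadata dims the dark cave scene by half even though the display could have shown it untouched, while dynamic metadata leaves it alone and only compresses the bright scene.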

HDR10’s limitations, its ubiquity, and the lack of enforcement that comes with an open standard all leave manufacturers free to tune their displays to look better than competitors with the same content source. As a result, there’s quite a variation between HDR10-compatible devices in how the format is interpreted and implemented. Two screens can call themselves HDR10 compliant, but offer completely different results.

Despite these seeming limitations, HDR10 still provides a better image overall than SDR, so it’s not to be sniffed at. It just means that, if a better supporting standard is available, the hardware will use that for HDR content where possible, but it will almost certainly fall back to HDR10 if the support for the other standard isn’t available for that particular video.
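The fallback behavior described above can be sketched as a simple preference list. The ordering below is an assumption made for illustration, as is the function name; actual devices and streaming services negotiate formats in their own ways.

```python
from typing import Set

# Assumed preference order for illustration only: formats with
# dynamic metadata first, HDR10 as the common fallback.
PREFERENCE = ["Dolby Vision", "HDR10+", "HDR10"]


def pick_format(device_supports: Set[str], content_offers: Set[str]) -> str:
    """Return the most preferred format both sides have in common."""
    for fmt in PREFERENCE:
        if fmt in device_supports and fmt in content_offers:
            return fmt
    return "SDR"  # nothing in common, so play the standard version


# A Dolby Vision television playing a title offered in HDR10+ and HDR10
print(pick_format({"Dolby Vision", "HDR10"}, {"HDR10+", "HDR10"}))  # HDR10
```

Because HDR10 appears on practically every HDR device and title, it is almost always the format the two sides end up sharing when the premium options don’t match.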

HDR10+

Just to confuse matters, there’s also HDR10+, which is championed by Samsung, Panasonic, 20th Century Fox, and Amazon. Building on top of HDR10, HDR10+ makes changes to the format to include dynamic metadata and supports mastering up to 4,000 nits, enabling it to have a brighter and more optimized image throughout a movie.

Just like HDR10, HDR10+ is also an open standard, which means device producers can include support without paying licensing fees or royalties. This also means it falls into the same trap of variations between devices in terms of implementation.

After a slow start, HDR10+ has been gaining support from other producers, with the total number of adopters reaching 94 as of January 2020, which is still a seemingly low figure given the number of AV-related companies that exist on the market.

Dolby Vision

Created by Dolby as the name implies, Dolby Vision is the company’s take on HDR standards, one that also attempts to go far beyond what modern televisions are capable of displaying, enabling it to be somewhat futureproof.

For a start, the standard opts for 12-bit color instead of 10-bit, which significantly increases the number of colors available from a billion to over 68 billion. To viewers, this effectively means there will be barely any visible banding of colors that may still appear in 10-bit standards and SDR, and in turn can produce a closer-to-life picture.

An example of how Dolby Vision can change the appearance of an image.

Dolby Vision can also be mastered at higher levels, with content currently mastered at around 4,000 nits, but with a theoretical maximum support of 10,000 nits, and with dynamic metadata too. At this point in time, this is an academic maximum, as it is many times higher than the brightness capabilities of modern TVs.

Unlike HDR10 and HDR10+, Dolby Vision isn’t an open standard, as companies have to pay for a license to create content or hardware that uses it. Furthermore, hardware has to be approved by Dolby before it can support the standard in the first place, and that could require building with specific minimum capabilities, which can raise the cost of production.

This does offer a benefit to consumers of ensuring there’s some level of uniformity between devices that support Dolby Vision. While there may still be some differences due to component capabilities, such as brightness or contrast, the Dolby approval means the picture won’t be wildly different from what anyone familiar with Dolby Vision will expect to see.

Dolby Vision support is offered on a wide range of devices, with it being more popular than HDR10+ among device vendors, despite the additional cost.

HLG

Developed by the BBC and NHK, Hybrid Log Gamma is a free-to-use format that is geared more towards broadcast use than for typical streaming video services or for using local media.

The way that HLG functions makes it attractive to broadcasters, as it is an HDR standard that is backwards compatible with SDR televisions. For consumers that means little, but for broadcasters, this means only one video signal needs to be broadcast, rather than needing separate HDR and SDR feeds.

The BBC’s tests of HLG included broadcasts of Planet Earth II.

HLG’s trick is to combine two curves to dictate the brightness of an image. A standard gamma curve, usable by SDR screens, covers the darker portion of the signal, while a logarithmic curve covers the brighter sections of an image, allowing HDR screens to adjust those areas more appropriately.

HLG is limited to a 10-bit depth, which puts it on a par with HDR10 in terms of color depth.
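For the curious, the hybrid curve can be written out in a few lines. The sketch below follows the HLG transfer function as published in ITU-R BT.2100, with the constants quoted from that recommendation as best recalled here, so treat them as something to check against the specification rather than as a reference implementation. The function name is illustrative.

```python
import math

# Constants as given in ITU-R BT.2100 for HLG (verify against the spec).
A = 0.17883277
B = 1 - 4 * A                   # approximately 0.28466892
C = 0.5 - A * math.log(4 * A)   # approximately 0.55991073


def hlg_oetf(e: float) -> float:
    """Map normalized scene light (0.0 to 1.0) to an HLG signal value."""
    if not 0.0 <= e <= 1.0:
        raise ValueError("scene light must be normalized to 0.0-1.0")
    if e <= 1 / 12:
        # Darker part: a square-root (gamma-like) curve that SDR
        # screens can interpret sensibly.
        return math.sqrt(3 * e)
    # Brighter part: a logarithmic curve that packs highlights
    # into the upper half of the signal range.
    return A * math.log(12 * e - B) + C


for light in (0.0, 1 / 12, 0.5, 1.0):
    print(f"scene light {light:.4f} -> signal {hlg_oetf(light):.4f}")
```

The two branches meet at a signal value of 0.5, so the lower half of the signal behaves much like a conventional SDR picture while the upper half is reserved for highlights that HDR screens can make use of.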

While it is unlikely HLG will be used for general online content distribution, the hybrid nature of the standard makes it attractive to services offering live broadcasts, such as online versions of TV channels.

Many televisions already offer support for HLG, allowing them to support future broadcasts that take advantage of the standard, though it seems there may still be some time before HLG becomes widely used.

Advanced HDR

Developed by Technicolor, Advanced HDR actually covers three HDR formats instead of one.

Single-Layer HDR1 (SL-HDR1) is a broadcaster-centric version that is similar to HLG, in that it combines SDR and HDR-compatible content in a single stream. In effect, the SDR signal has added dynamic metadata that allows it to be converted into an HDR signal for viewing, albeit without the quality of using higher bit rates.

SL-HDR2 is quite similar in concept to HDR10, but with the addition of dynamic metadata for improved scene-to-scene image quality. Unlike SL-HDR1, there is no SDR-compatible version, making it less desirable for broadcasters.

Little is known about SL-HDR3, but it is believed to use Hybrid Log Gamma combined with dynamic metadata.

While Advanced HDR, like HLG, isn’t really used that much beyond test broadcasts, there is still the potential for it to be adopted widely by the industry. Advanced HDR’s SL-HDR1 and SL-HDR2 are both included in the ATSC 3.0 standards, an in-development tome which will help dictate how TV channels are broadcast in the future.

Monitors and DisplayHDR

While HDR standards and support are relatively well known and understood for televisions, things are somewhat different when it comes to monitors. Generally speaking, a monitor will support HDR10 at a minimum, but there is more differentiation at play than simply what standards work with a screen.

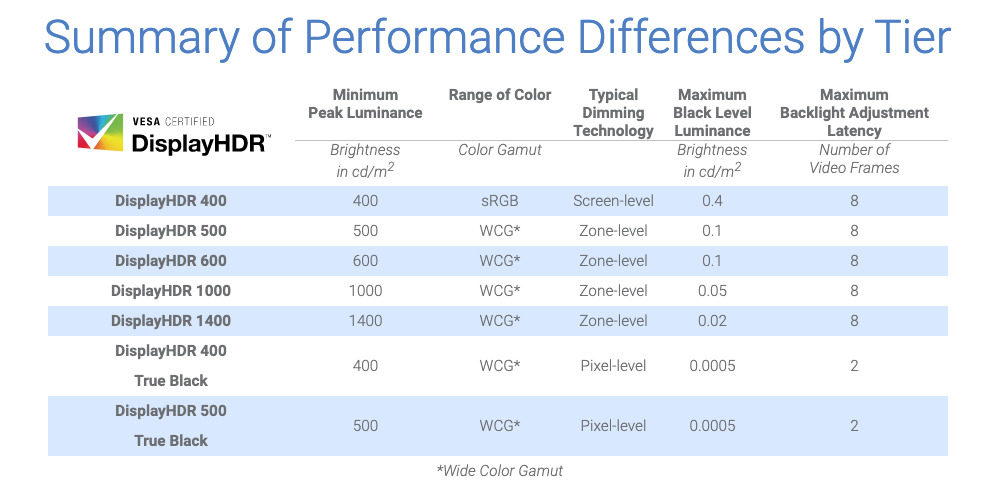

DisplayHDR and the companion DisplayHDR True Black are high-performance monitor and display compliance test specifications from VESA, which calls them the display industry’s first open standard for specifying HDR quality. They are used to measure attributes including luminance, color gamut, and bit depth.

DisplayHDR’s required specifications for different performance tiers.

The idea is that a monitor is tested and given a certification at one of a number of performance tiers, depending on whether it meets specific criteria. The tiers include DisplayHDR 400, 500, 600, 1000, and 1400, as well as 400 True Black and 500 True Black, with True Black reserved for OLED displays.

The performance criteria cover the minimum luminance levels, black levels, active dimming in a checkerboard, color gamut and minimum luminance tests, minimum bit depths, the “rise time” from black to maximum luminance, and white point accuracy. The better the display performs, the higher up the tiers it can go, and the manufacturer can put a logo on the box for the monitor declaring its certification.

VESA also offers a list of all monitors and notebooks that have passed its DisplayHDR certifications, sorted by level.

The DisplayHDR testing is purely voluntary for the manufacturers to undertake, and due to only being introduced at the end of 2017, the list of certified displays isn’t exhaustive of the entire industry. As monitor producers can still sell displays as having HDR support without any of the testing, it is best to treat DisplayHDR as an additional guide when selecting a monitor to buy.

For example, the LG UltraFine Ergo 32-inch monitor doesn’t have a DisplayHDR certification, but it still has support for HDR10. Its peak brightness of 350 nits may make an HDR10 video appear different from how it looks on a television that can produce more light and is closer to the 1,000-nit peak brightness HDR10 supports.

Also, remember that there’s nothing stopping you from simply plugging a Mac into a television, which may have more chance of having Dolby Vision support.

Apple’s HDR video support

Apple largely offers support for two HDR standards for video playback, with HDR10 or Dolby Vision generally offered on compatible hardware.

For iPhones, all iPhone 11-generation models are capable of playing back HDR10 and Dolby Vision content, along with the second-generation iPhone SE, iPhone XS, iPhone XR, iPhone X, and iPhone 8.

On iPad, support extends from the second-generation iPad Pro onwards, but not other iPad models.

Apple offers a support page listing which Mac and MacBook models have HDR support, and whether that support applies to an external display or the built-in display.

For the iMac Pro, 2018 MacBook Air or later, and the 2018 MacBook Pro or later models, HDR is available on the built-in displays. The 2018 and later MacBook Pro, iMac Pro, 2018 Mac mini, and 2019 Mac Pro all have HDR support when connected to compatible external displays, while the MacBook Air only offers SDR support on external displays.

The Apple TV 4K is where most people’s thoughts will turn when considering watching HDR content.

The Apple TV 4K is the only model to offer HDR playback, with both HDR10 and Dolby Vision support, though it does require a television with 4K support as well. The Apple TV HD does not support HDR in any form.

Within Apple’s digital storefronts, Apple sells movies and TV shows in HDR, with some additionally supporting Dolby Vision. Both are identified with logos for either HDR or Dolby Vision.

Not an HDR format war

Unlike previous format wars, where a winner must be decided, we are instead in a situation where multiple standards can coexist. For many, this will take the form of either HDR10+ and HDR10 or Dolby Vision and HDR10, with HDR10 being the back-up to the better-quality version.

While HLG and Advanced HDR are nice to consider, they are not essential at the moment. Given their broadcaster-centric leanings, it’s probably going to be more likely that users will see support for the formats in televisions, but not necessarily anytime soon in smaller devices.

For those who are serious about home cinema, Dolby Vision has the most allure, and anyone invested in the Apple ecosystem will find that many of their devices already support the higher-quality format. Given how widely televisions support it, in many cases all they require is an Apple TV 4K to enjoy Dolby Vision.

The content available in Apple’s digital storefronts, as well as the plethora of streaming services that support both Dolby Vision and HDR10, also means there is more than enough HDR content out there to consume. Even if it’s HDR10, it’s still better than what you would see in a non-HDR film or TV show, and for all but the pickiest, that’s good enough.